Association Rule

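An expression of the form $A \Rightarrow C$, where $A$ (the antecedent) and $C$ (the consequent) are disjoint itemsets.

- Support (sup): fraction of the N transactions that contain both A and C: $sup(A \Rightarrow C) = sup(A \cup C)$, the number of transactions containing every item of $A \cup C$ divided by $N$.

- Confidence (conf): fraction of the transactions containing A that also contain all the items in C: $conf(A \Rightarrow C) = \frac{sup(A \cup C)}{sup(A)}$.

- Rules with low support may arise from random associations.

- Rules with low confidence are not reliable.

- Nevertheless, a rule with relatively low support but high confidence can represent an uncommon but interesting phenomenon.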
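As a rough illustration of the two definitions, the sketch below computes support and confidence on a small made-up basket dataset. The data, the names `TRANSACTIONS`, `support`, and `confidence`, and the chosen rule are all hypothetical; `confidence` assumes the antecedent occurs in at least one transaction.

```python
from fractions import Fraction

# Hypothetical toy market-basket data; each transaction is a set of items.
TRANSACTIONS = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item of `itemset`."""
    itemset = frozenset(itemset)
    hits = sum(1 for t in transactions if itemset <= t)
    return Fraction(hits, len(transactions))


def confidence(antecedent, consequent, transactions):
    """sup(A u C) / sup(A): how often C appears in transactions containing A."""
    a = frozenset(antecedent)
    c = frozenset(consequent)
    # Assumes sup(A) > 0; otherwise this raises ZeroDivisionError.
    return support(a | c, transactions) / support(a, transactions)


# Rule {milk, diapers} => {beer}
rule_sup = support({"milk", "diapers", "beer"}, TRANSACTIONS)
rule_conf = confidence({"milk", "diapers"}, {"beer"}, TRANSACTIONS)
print(rule_sup, rule_conf)  # 2/5 2/3
```

Exact fractions are used here only to keep the small example free of rounding noise; floats would work equally well on real data.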

Apriori principle

If an itemset is frequent, then all of its subsets must also be frequent

- It holds due to the following property of the support measure: $\forall X, Y : (X \subseteq Y) \Rightarrow sup(X) \geq sup(Y)$

- The support of an itemset never exceeds the support of its subsets

- This is known as the anti-monotone property of support
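The practical use of the principle is pruning: if any subset of a candidate itemset is infrequent, the candidate cannot be frequent and its support need not be counted. The following is a naive, unoptimized sketch of level-wise frequent itemset mining built on that idea. The function name `apriori`, the toy data, and the threshold `0.6` are assumptions chosen for illustration.

```python
from itertools import combinations

# Hypothetical toy market-basket data.
TRANSACTIONS = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]


def apriori(transactions, min_support):
    """Return {itemset: support} for all itemsets with support >= min_support."""
    n = len(transactions)

    def sup(itemset):
        return sum(1 for t in transactions if itemset <= t) / n

    # Level 1: frequent single items.
    items = sorted({item for t in transactions for item in t})
    level = {frozenset([i]) for i in items if sup(frozenset([i])) >= min_support}
    frequent = {s: sup(s) for s in level}

    k = 2
    while level:
        # Join step: unite pairs of frequent (k-1)-itemsets into k-itemsets.
        candidates = {a | b for a in level for b in level if len(a | b) == k}
        # Prune step (Apriori principle): discard any candidate that has an
        # infrequent (k-1)-subset, without counting its support.
        candidates = {
            c for c in candidates
            if all(frozenset(s) in level for s in combinations(c, k - 1))
        }
        level = {c for c in candidates if sup(c) >= min_support}
        frequent.update({c: sup(c) for c in level})
        k += 1
    return frequent


frequent = apriori(TRANSACTIONS, min_support=0.6)
for itemset, s in sorted(frequent.items(), key=lambda kv: (len(kv[0]), sorted(kv[0]))):
    print(sorted(itemset), s)
```

On this data, `{bread, diapers, beer}` is never counted because its subset `{bread, beer}` is already infrequent, which is exactly the saving the anti-monotone property provides.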

Statistical Independence

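- Statistical independence: $sup(A \cup C) = sup(A) \cdot sup(C)$

- Positively correlated: $sup(A \cup C) > sup(A) \cdot sup(C)$

- Negatively correlated: $sup(A \cup C) < sup(A) \cdot sup(C)$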

Statistical-based Measures

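Lift

- $lift(A \Rightarrow C) = \frac{conf(A \Rightarrow C)}{sup(C)} = \frac{sup(A \cup C)}{sup(A) \cdot sup(C)}$

- lift evaluates to 1 for independence

- insensitive to rule direction

- it is the ratio of the observed support of the rule to the support expected under independence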

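Leverage

- $leve(A \Rightarrow C) = sup(A \cup C) - sup(A) \cdot sup(C)$

- leverage evaluates to 0 for independence

- insensitive to rule direction

- it is the proportion of additional cases containing both A and C beyond what is expected under independence (multiply by N to obtain the number of cases)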

Conviction

- $conv(A \Rightarrow C) = \frac{1 - sup(C)}{1 - conf(A \Rightarrow C)}$

- conviction is infinite if the rule is always true (confidence equal to 1)

- sensitive to rule direction

- it is the ratio between the expected frequency with which A occurs without C if A and C were independent (that is, the expected frequency of incorrect predictions) and the observed frequency of incorrect predictions

- also called novelty
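A small sketch can make the behaviour of the three measures concrete, in particular which ones change when the rule is reversed. It uses the standard definitions, spelled out in the code comments. The toy data and the names `support` and `rule_measures` are hypothetical, and the code assumes both the antecedent and the consequent occur at least once.

```python
import math
from fractions import Fraction

# Hypothetical toy market-basket data.
TRANSACTIONS = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]


def support(itemset, transactions):
    """Fraction of transactions that contain every item of `itemset`."""
    itemset = frozenset(itemset)
    return Fraction(sum(1 for t in transactions if itemset <= t), len(transactions))


def rule_measures(antecedent, consequent, transactions):
    """Confidence, lift, leverage and conviction of the rule A => C."""
    a, c = frozenset(antecedent), frozenset(consequent)
    sup_a = support(a, transactions)
    sup_c = support(c, transactions)
    sup_ac = support(a | c, transactions)
    conf = sup_ac / sup_a                  # assumes sup(A) > 0
    lift = sup_ac / (sup_a * sup_c)        # 1 under independence
    leverage = sup_ac - sup_a * sup_c      # 0 under independence
    # (1 - sup(C)) / (1 - conf); infinite when the rule never fails.
    conviction = math.inf if conf == 1 else (1 - sup_c) / (1 - conf)
    return {"conf": conf, "lift": lift, "leverage": leverage, "conviction": conviction}


forward = rule_measures({"diapers"}, {"beer"}, TRANSACTIONS)
backward = rule_measures({"beer"}, {"diapers"}, TRANSACTIONS)
print(forward)
print(backward)
```

Here lift (5/4) and leverage (3/25) are identical for both directions, while conviction is 8/5 for `{diapers} => {beer}` and infinite for `{beer} => {diapers}`, since every transaction with beer also contains diapers.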

Conclusion

- higher support: the rule applies to more records

- higher confidence: the chance that the rule is true for some record is higher

- higher lift: the chance that the rule is just a coincidence is lower

- higher conviction: the rule is violated less often than it would be if the antecedent and the consequent were independent

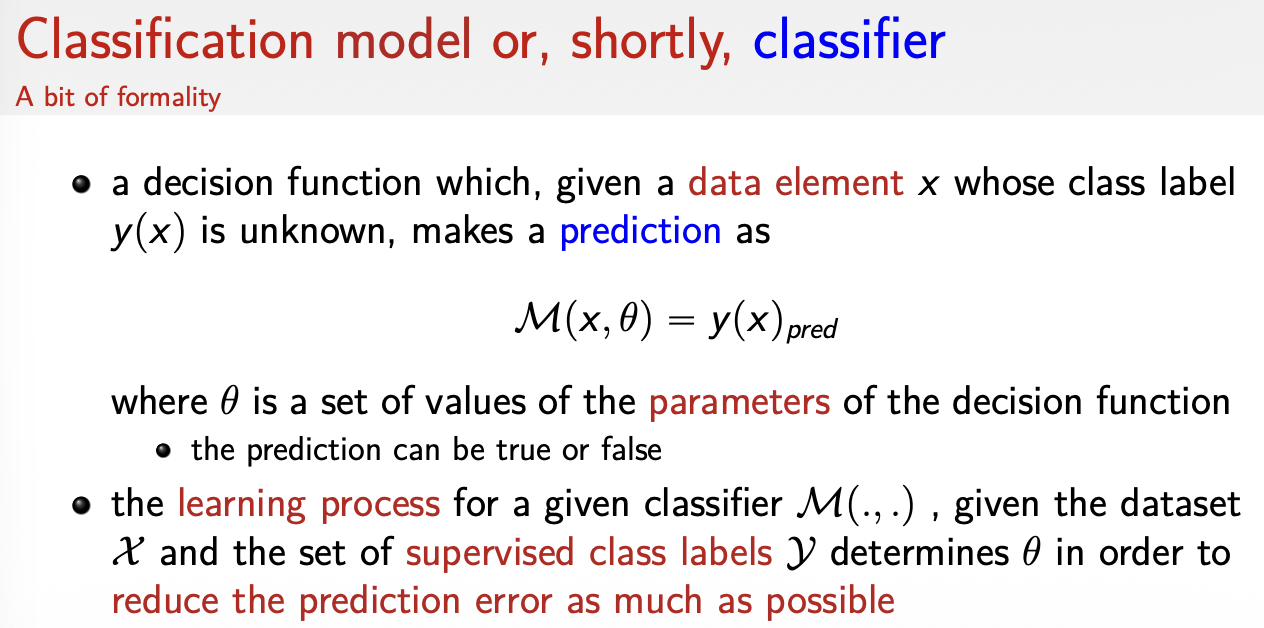

Classification

All models are wrong, but some are useful.

Activation Function Comparison Table

| Activation | Output Range | Derivative Range | Zero-Centered? | Common Use |
|---|---|---|---|---|
| Sigmoid | (0, 1) | (0, 0.25] | No | Binary output, probability modeling |
| Tanh | (-1, 1) | (0, 1] | Yes | Hidden layers (small networks) |
| ReLU | [0, +inf) | {0, 1} | No | Default for deep hidden layers |
| LeakyReLU | (-inf, +inf) | {alpha, 1} | Partially | Fix for dying ReLU |
| Arctan | (-pi/2, pi/2) | (0, 1] | Yes | Smooth alternative to tanh |
| Softmax | (0, 1), sum=1 | Jacobian matrix (not elementwise) | No | Multi-class output layers |
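The derivative ranges can be checked numerically. The sketch below uses only the standard library and evaluates each derivative on a grid over [-10, 10]. The function names, the grid, and `alpha=0.01` are illustrative assumptions; the plain `sigmoid` shown here is not protected against overflow for very large negative inputs.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def d_sigmoid(x):
    s = sigmoid(x)
    return s * (1.0 - s)


def d_tanh(x):
    return 1.0 - math.tanh(x) ** 2


def relu(x):
    return max(0.0, x)


def d_relu(x):
    # The derivative is undefined at 0; 0 is chosen here by convention.
    return 1.0 if x > 0 else 0.0


def leaky_relu(x, alpha=0.01):
    return x if x > 0 else alpha * x


def d_leaky_relu(x, alpha=0.01):
    return 1.0 if x > 0 else alpha


def d_arctan(x):
    return 1.0 / (1.0 + x * x)


def softmax(xs):
    # Subtracting the maximum keeps exp() from overflowing.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


grid = [i / 10 for i in range(-100, 101)]  # -10.0, -9.9, ..., 10.0

max_d_sigmoid = max(d_sigmoid(x) for x in grid)
max_d_tanh = max(d_tanh(x) for x in grid)
max_d_arctan = max(d_arctan(x) for x in grid)
relu_slopes = {d_relu(x) for x in grid}
leaky_slopes = {d_leaky_relu(x) for x in grid}
probs = softmax([1.0, 2.0, 3.0])

print(max_d_sigmoid, max_d_tanh, max_d_arctan)  # 0.25 1.0 1.0
print(sorted(relu_slopes), sorted(leaky_slopes))
print(probs, sum(probs))
```

The maxima of the sigmoid, tanh, and arctan derivatives are all reached at x = 0, which is why those derivatives shrink quickly for inputs far from zero.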